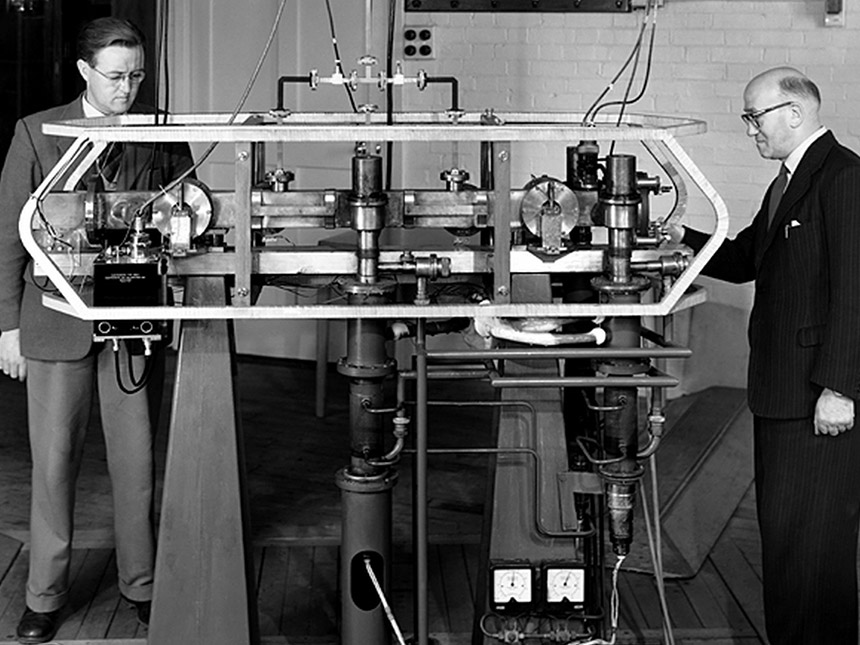

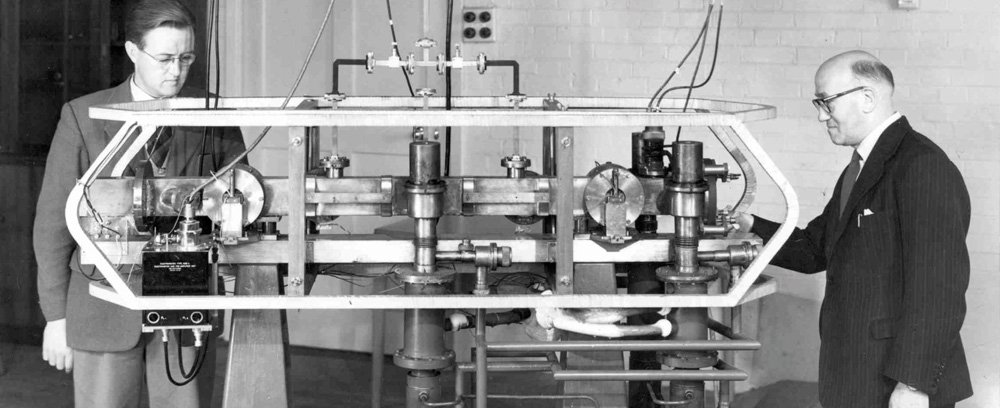

Louis Essen & Jack Parry with the first caesium atomic clock in 1955. Image Source: National Physical Laboratory

Measuring distance, speed, or even something as intangible as sound is something I can more or less wrap my head around. Time, however, flows in mysterious ways that our minds often struggle to comprehend. Sometimes it flies, and when we least want it to, it seems to stand still. Measuring it accurately has understandably remained a source of fascination, for scientific as well as philosophical reasons. The most accurate of all timekeepers, the atomic clock, turns 62 today, so let’s look back at how it started ticking and buzzing away on June 3, 1955.

The Early Beginnings

Thousands of years ago, it all started with the simplest of observations, such as the alternation of day and night or the periodic succession of the seasons, which were easy enough to keep track of. Everything else, such as weeks, months, hours, and seconds, was much more challenging to quantify accurately. I am working on an article that will discuss the history of time and timekeeping from a watch enthusiast’s perspective, so let us skip those few thousand words (and years) and get straight to how atomic timekeeping came to be.

Time, by definition, is the indefinite, continued progress of existence and events that occur in apparently irreversible succession from the past, through the present, to the future. An operational definition of time comes from counting a certain number of repetitions of a standard cyclical event (such as the swing of a free-swinging pendulum), which together constitute one standard unit. In other words, to measure time, we need to divide it into equally long, repeating events and then count those events.
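The counting idea can be sketched in a few lines of Python. This is a minimal illustration, not anything from Essen’s work: the names below are hypothetical, the pendulum is assumed to be a “seconds pendulum” whose every swing lasts one second, and the caesium figure is the 9,192,631,770 oscillations per second on which the SI definition of the second has been based since 1967. The shorter the repeating event, the finer the interval we can resolve.

```python
# Minimal sketch: measuring time by counting repetitions of a cyclical event.
# Assumptions for illustration only (not from the article):
#  - a "seconds pendulum", where each swing lasts exactly 1 s
#  - the SI second (since 1967): 9,192,631,770 caesium-133 oscillations

PENDULUM_SWING_S = 1.0           # duration of one pendulum swing, in seconds
CAESIUM_HZ = 9_192_631_770       # caesium oscillations per SI second


def elapsed_seconds(count: int, period_s: float) -> float:
    """Elapsed time is the number of counted events times the length of one event."""
    return count * period_s


def caesium_count_to_seconds(count: int) -> float:
    """Convert a count of caesium oscillations into seconds."""
    return count / CAESIUM_HZ


if __name__ == "__main__":
    # One minute measured both ways.
    print(elapsed_seconds(60, PENDULUM_SWING_S))          # 60.0
    print(caesium_count_to_seconds(60 * CAESIUM_HZ))      # 60.0
    # Smallest interval each "clock" can resolve: a single counted event.
    print(f"pendulum: {PENDULUM_SWING_S} s, caesium: {1 / CAESIUM_HZ:.3e} s")
```

Both approaches count events in exactly the same way; the difference is that one caesium oscillation lasts roughly a ten-billionth of a second, so the same counting principle resolves time about ten billion times more finely than a pendulum.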

A second used to be defined as 1/86,400 of the mean solar day, but irregularities in the Earth’s rotation make this definition too imprecise for the most demanding measurements. The more accurately we want to keep time, the shorter and more regular the intervals we have to divide it into.
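The arithmetic behind the old definition is simple, and a quick worked example shows why small irregularities matter. The 1 ms lengthening of the day used below is a hypothetical figure chosen only for illustration, not a measured value.

```latex
1\ \text{mean solar day} = 24\ \text{h} \times 60\ \tfrac{\text{min}}{\text{h}} \times 60\ \tfrac{\text{s}}{\text{min}} = 86\,400\ \text{s}
\;\Longrightarrow\;
1\ \text{s} = \tfrac{1}{86\,400}\ \text{mean solar day}

% Hypothetical: the day runs 1 ms longer than nominal
\Delta t_{\text{per second}} = \frac{1\ \text{ms}}{86\,400} \approx 1.16 \times 10^{-8}\ \text{s} \approx 11.6\ \text{ns},
\qquad
\Delta t_{\text{per year}} \approx 365 \times 1\ \text{ms} = 0.365\ \text{s}
```

Spread over a single second, such an error looks negligible, but accumulated over a year it adds up to a noticeable fraction of a second.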

The idea of using atomic transitions to measure time was suggested by Lord Kelvin in 1879, but it was still a very long way from idea to realization. As we will see, magnets and magnetism play a role as well, so it is worth mentioning Isidor Rabi, who further developed the theory behind magnetic resonance and in 1945 became the first to suggest that atomic beam magnetic resonance might be used as the basis of a clock.

The first atomic clock followed shortly after, when the U.S. National Bureau of Standards (NBS, now NIST) built a device based on an absorption line of ammonia molecules in 1949. Somewhat ironically, though perhaps not surprisingly, it was less accurate than existing quartz clocks and served mainly as a demonstration of the concept.

The First Practical Atomic Clock By Louis Essen & Jack Parry

British physicist Louis Essen earned his PhD and Doctor of Science degrees from the University of London before becoming interested in moving timekeeping away from the traditional definition based on the period of the Earth’s rotation.

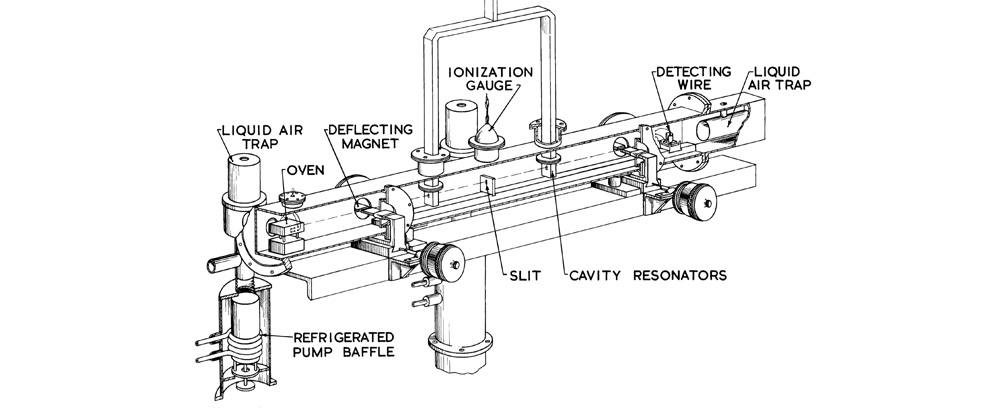

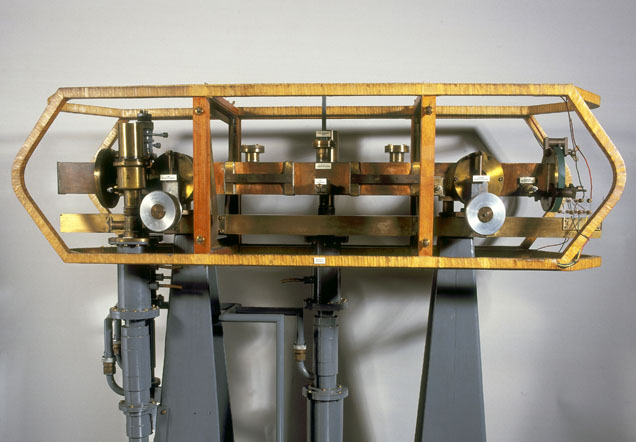

Fascinated by the possibility of using the frequency of atomic spectra to improve time measurement, he learned that the NBS had already demonstrated the feasibility of measuring time using caesium as an atomic reference. In 1955, in collaboration with Jack Parry, he developed the first practical atomic clock, combining the caesium atomic standard with conventional quartz crystal oscillators so that existing timekeeping could be calibrated against it.

By using microwaves to switch caesium atoms between two closely spaced energy levels (the hyperfine levels of the atom’s ground state), Essen and Parry were able to stabilize the microwaves at a precise and reproducible frequency. Much as a pendulum clock counts the swings of its pendulum, their prototype atomic clock relied on this frequency to keep track of the passing of time.